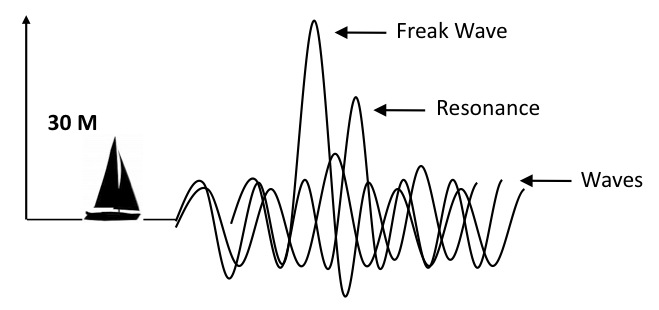

Freak Waves (also referred to as Rogue Waves) may appear randomly under particular atmospheric pressure fields and wind patterns, and can reach more than 30 meters from trough to crest, whereas most ships are designed to withstand waves of 15 meters. Severe storms usually generate waves on the order of 12 meters.

Decades ago, freak waves were considered a myth, a legend, since no ship or crew had survived an encounter with one. Reports from surviving crews and damage observed on surviving ships have progressively convinced scientists that freak waves do indeed exist.

Moving with the swell, a freak wave grows by drawing energy from adjacent waves, picking up height and energy from the combination of several ordinary waves.

The Freak Wave in aviation


French-English translation: Michel TREMAUD

As a metaphor, the Freak Wave represents an extreme hazard likely to result in an accident.

Several models are available to analyse aviation accidents.

The first and simplest model is called the “domino effect”, whereby the accident is explained simply by the successive occurrence of events linked together by cause-and-effect relationships. This is a linear, sequential model that is far too basic to explain the complexity of aviation accidents.

More elaborate is the model developed by James Reason, which analyses latent failures (also referred to as latent pathogens) that may combine and lead to an accident despite the safety barriers provided by training, technology, and so on. This model focuses on error, but not solely on the error made by the front-line operator (who is only the last link in the error chain). It is a global approach, but it is still a linear process; nevertheless, it provides a fairly accurate understanding of the causes of accidents in complex systems.

Finally, Erik Hollnagel proposed a systemic model that describes an accident as the result of complex interactions between the various components of a human activity. The accident is then the consequence of coinciding events that, linked to each other, enter into resonance: a similar diverging phenomenon exists in nature, namely the Freak Wave (or Rogue Wave). Hollnagel’s model cannot be broken down into parts and is not linear. Its main interest for the aviation community is that it treats the time scale as a key element for understanding and analysing accidents.

Many similarities exist between the onset of a Freak Wave and the chain of events, specific to our own activity, that leads to an accident: there are many components, most of which cannot be acted upon, and each component varies over time according to its own natural frequency. These components may or may not combine with each other (i.e., may or may not enter into resonance).
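To make the resonance idea concrete, here is a toy Python sketch. It is an illustration of the metaphor only, not Hollnagel’s method and not ocean-wave physics (real rogue waves also involve nonlinear effects that this ignores). Each hypothetical component is a unit-amplitude cosine with its own period (7, 11 and 13 arbitrary time units, chosen for illustration), and the components are simply summed; the threshold of 2.9 is likewise an arbitrary “critical level”. No single component ever exceeds 1, yet the sum approaches 3 whenever all crests coincide.

```python
import math


def combined_level(t: float, periods: list[float]) -> float:
    """Sum of unit-amplitude cosine components, one per (hypothetical) period.

    Each component stands for a factor oscillating at its own natural
    frequency; the sum is the combined 'wave' at time t.
    """
    return sum(math.cos(2 * math.pi * t / period) for period in periods)


def resonance_times(periods: list[float], threshold: float, horizon: int) -> list[int]:
    """Integer time steps in [0, horizon] where the combined level exceeds threshold."""
    return [t for t in range(horizon + 1) if combined_level(t, periods) > threshold]


if __name__ == "__main__":
    periods = [7, 11, 13]  # hypothetical natural periods, arbitrary time units
    # The sum exceeds 2.9 only when all three crests coincide,
    # i.e. every 7 * 11 * 13 = 1001 time steps.
    print(resonance_times(periods, threshold=2.9, horizon=2002))  # [0, 1001, 2002]
```

Most of the time the components partly cancel each other out; only rare alignments produce an extreme peak, which is the sense in which the Freak Wave metaphor applies.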

A study (Archimède experiment, Jean-Claude Wanner) demonstrated that such resonances can be reproduced, turning flight scenarios that would otherwise have been challenging but manageable into diverging ones. At the end of the experiment, several volunteer flight crews left the flight simulator still in disbelief that they had simply crashed: “How could anyone be trapped in such circumstances?”, as one pilot put it.

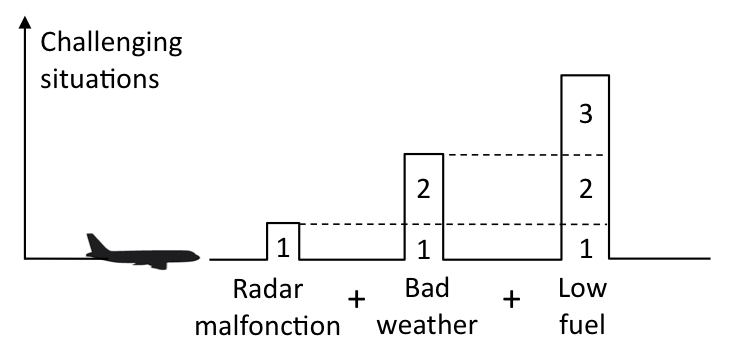

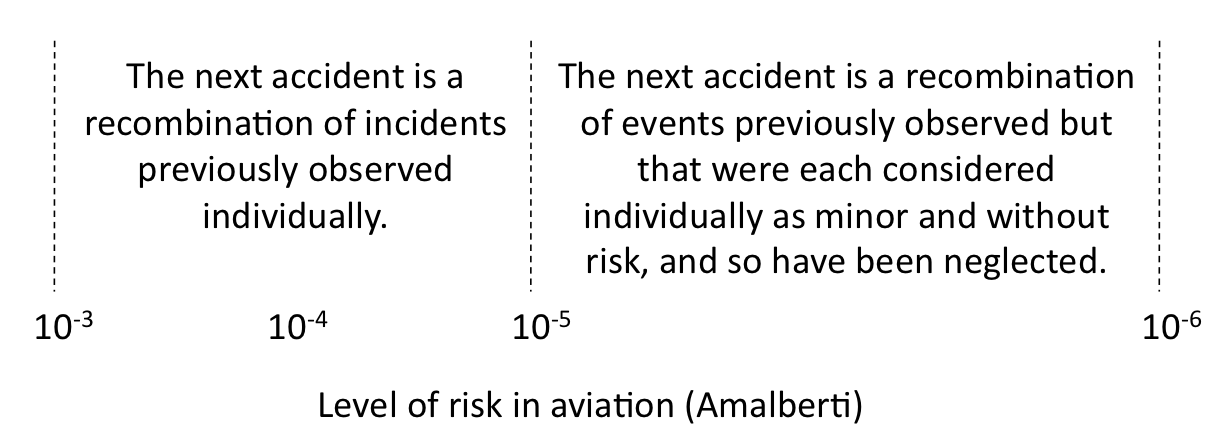

The illustration below depicts a situation that is far from unrealistic from a probability standpoint, given the safety level expected in daily operations.

Factors may combine: some may self-dampen and some may diverge. The number of possible scenarios depends on the number of controlling elements: with 4 elements, resulting in 6 links, there are 64 possible scenarios. By comparison, with 10 controlling elements, and thus 45 links, the number of possible scenarios is as high as about 35 trillion (roughly 3.5 × 10¹³).

In general, for $N$ elements, the number of links is $L = \frac{N(N-1)}{2}$ and the number of scenarios is $S = 2^{L}$.
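The arithmetic can be checked with a short Python sketch. It assumes, as the figure of 64 scenarios for 6 links implies (2⁶ = 64), that each link is either active or inactive, so a scenario is one on/off combination of all links; the function names are illustrative only.

```python
def link_count(n_elements: int) -> int:
    """Number of pairwise links between n elements: L = N(N-1)/2."""
    return n_elements * (n_elements - 1) // 2


def scenario_count(n_elements: int) -> int:
    """Number of scenarios S = 2**L, each link being either active or inactive."""
    return 2 ** link_count(n_elements)


if __name__ == "__main__":
    for n in (4, 10):
        print(f"N={n}: L={link_count(n)}, S={scenario_count(n):,}")
    # N=4: L=6, S=64
    # N=10: L=45, S=35,184,372,088,832
```

Python integers have arbitrary precision, so the exact value of 2⁴⁵ is printed without rounding; it shows how quickly the number of scenarios explodes as elements are added.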

A single component of our aviation system (aircraft, weather, flight crew, infrastructure, traffic, …) itself features multiple elements and, correspondingly, multiple links and possible scenarios!

Here is an analysis of such combinations that may lead to an accident, as a function of their probability of occurrence. It helps explain why aviation safety experts such as James Reason contend that safety would be better enhanced by developing pilots’ core competencies than by attempting to reduce the number of errors at all levels.

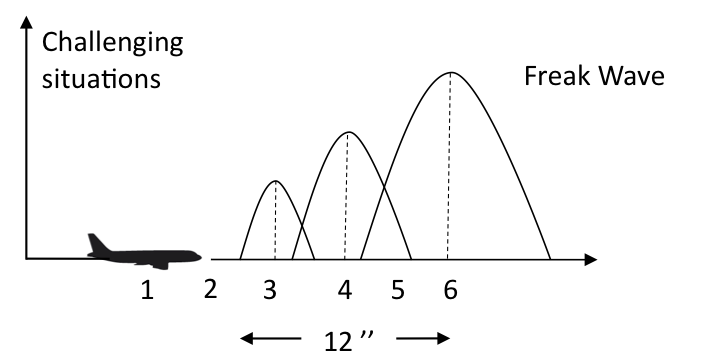

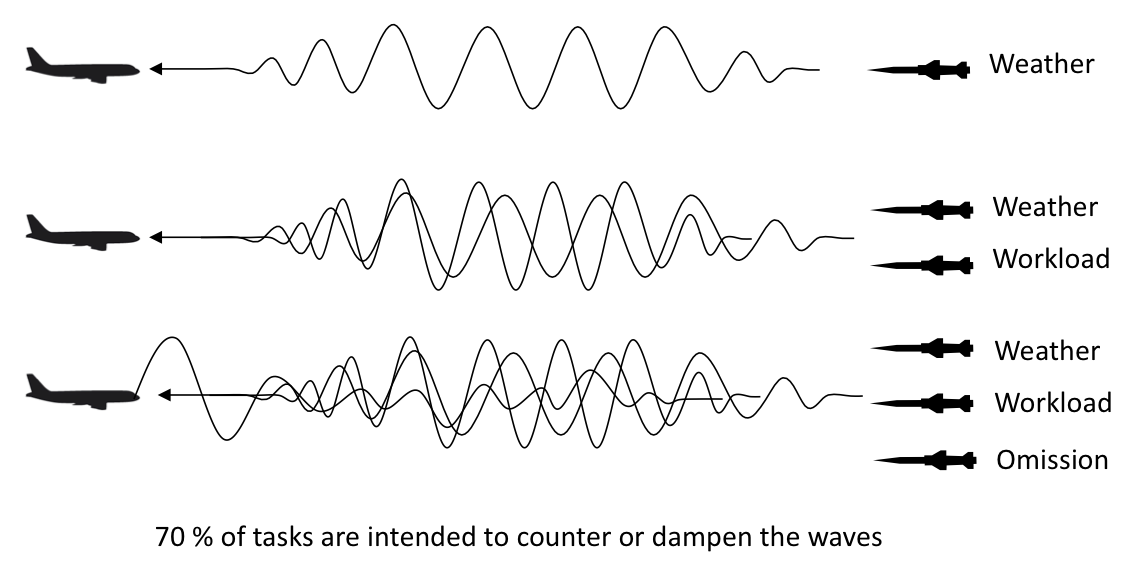

The dynamic nature of a flight is another subtle and insidious diverging resonance that the pilot routinely faces. The crew’s ability to cope with competing task demands may no longer match the prevailing situation, and omissions or mistakes may result from this time pressure, with cascading consequences, as illustrated by the example and illustration below:

1. Takeoff, initially cleared to 2000 ft, with an immediate turn after takeoff and a frequency change to obtain further clearance to 3000 ft. Analysis: a demanding situation.

2. The PF calls “After takeoff checklist”; shortly thereafter, the controller requests the frequency change. Analysis: overlapping crew duties and interruption of the after takeoff action sequence.

3. The PNF, unfamiliar with the airport, reads back an incorrect frequency. Analysis: the need for an additional communication and the frequency change further increase an already high crew workload.

4. The climb, which should have been continuous, is interrupted in the absence of a new clearance. The after takeoff actions are not complete (i.e., the flaps are not retracted), and the flaps overspeed warning is triggered at level off. Analysis: mismatch between the crew’s mental model of an ideal, planned climb profile and the actual situation.

5. The PNF retracts the flaps, and the speed increases in level flight while the crew awaits clearance to climb to 3000 ft. The workload is high. The crew is aware that they are no longer on the planned optimum climb profile. Analysis: airspeed continues to increase; the crew does not perceive the impending trap, as it is focused on flap retraction and on maintaining level flight.

6. The clearance to climb to 3000 ft is finally obtained; the excess speed and the resulting high vertical speed when the climb is resumed trigger a TCAS RA, as the autopilot, in altitude capture mode, transiently overshoots the selected altitude, resulting in a loss of vertical separation.

Here is an excerpt from an ICAO document: “Checklists, briefings, standard calls and SOPs, as well as personal strategies and tactics, are examples of countermeasures. Flight crews devote time and effort to applying such countermeasures in order to maintain safety margins during the flight. Observations during training and competency checks suggest that as much as 70% of flight crew activities are linked to applying countermeasures.”

The added value of the resonance model is its integration of the space and time scales. As much as 70% of tasks are intended to counter or dampen the waves, that is, the potential resonances that may adversely affect the space and time scales of the flight. The pilot must therefore remain continuously “in tune” with the time scale: retract the flaps on schedule (not before / not after the flap retraction speed), manage systems on schedule (as per SOPs, not before / not after), act in due coordination with other crewmembers, and so on. Margins exist to accommodate the time scale, particularly during high-workload phases, which are also the most accident-prone (such as takeoff and approach-and-landing).

A study shows that six main factors are observed in most accidents: attention/monitoring errors, routine errors, omissions and mistakes in demanding situations, incorrectly performed non-normal procedures, inadequate responses to unexpected conditions, and non-adherence to published procedures.

This study analysed the conditions that resulted in such errors and deviations. High workload is cited as causing a loss of attention and an incorrect prioritization of tasks. In 50% of the observed cases, one or more decisions that would have been expected were not taken. In two-thirds of the surveyed accidents, a fault or failure of aircraft equipment is also reported (the significance of such a fault or failure lies not so much in the criticality of the problem itself as in the fact that it introduces an additional factor that adds to and complicates the chain of events). The lack or loss of information or cues (erroneous or delayed information, omitted or incorrect callouts, spurious warnings, lack of data …) is also often cited; lack of experience or insufficient knowledge is reported in one-third of accidents.

The study goes on to say: “When airline crews are not able to manage situations adequately, it is most often because of limitations of the overall aviation system rather than inherent deficiencies of the pilot.”

Finally, in the chapter titled “Other cross-cutting factors”, this study states: “The combination of stress and surprise with requirements to respond rapidly and to manage several tasks concurrently, as occurred in several of these accidents, can be lethal.”

70% (or more?) of the pilot’s activity involves managing, in real time, factors that naturally tend to diverge. The pilot manages a system (understood as a socio-technical system) that is inherently unstable. The following quote portrays this instability well: “The magician performs his/her art using illusion, whereas the tightrope walker must constantly restore an equilibrium that will never be reached.”

If not acted upon in a timely manner, the combination of certain factors, visible or invisible, may result in hazardous situations. The precursors of such situations are known (specific conditions and threats). Anticipation becomes an essential tool for managing threats in an environment where the time scale (tempo) is often imposed on the pilot (this is precisely what the TEM concept, threat and error management, is all about). The lack or loss of vigilance, high workload, lack of situational awareness, failure to identify or recognize a complex situation, … are factors likely to hinder timely anticipation. Operating in a reactive mode does not allow the pilot to effectively perceive an impending or developing condition. A mismatch arises between the pilot’s mental model and the actual situation, and the threat may increase rapidly, as the pilot is no longer managing the competing or pre-empting task demands dictated by the situation.

Few pilots have been, are, or ever will be exposed to a Freak Wave; however, all of them are routinely exposed to its building process.

Arriving at dawn at Roissy, we were assigned a VOR/DME approach due to work in progress on the runway, which resulted in a significantly displaced threshold, with blast fences to protect the workers. The visibility forecast was good, but the landing would take place facing the rising sun with some lingering morning mist; the maintenance work could only be seen at the last moment. Our own landing was uneventful, but after vacating the runway we spotted a B747 going around only a few meters from the jet-blast fences. The go-around was uneventful, but we could not help thinking that it had been a very close call.

For the B747 flight crew, the workload was high, visibility conditions were challenging, and fatigue was undoubtedly taking its toll on this long-haul crew; the crew possibly was not aware of, or did not anticipate, the displaced threshold … and did not see it until the last second. After the flight, I talked to the ATC shift supervisor, who had also perceived this event as a near-accident, to stress that despite all the information and signalling in place, such an event could easily be explained.

Happy landings

Jean Gabriel and Michel